FreeBSD Broadcom Wi-Fi Improvements

07 Feb 2018, 17:13 PST

Introduction

Since 2015, I've been working on improving FreeBSD support for Broadcom Wi-Fi devices and SoCs, including authoring the bhnd(4) driver family, which provides a unified bus and driver programming interface for these devices.

First committed in early 2016, bhnd(4) allowed us to quickly bring up FreeBSD/MIPS on Broadcom SoCs, but it has taken much longer to implement the full set of features required to support modern Broadcom SoftMAC Wi-Fi hardware.

Thanks to the generosity of the FreeBSD Foundation, I've recently finished implementing the necessary improvements to the bhnd(4) driver family. With these changes in place, I was finally able to port the existing bwn(4) Broadcom SoftMAC Wi-Fi driver to the bhnd(4) bus, and implement initial support for the BCM43224 and BCM43225 chipsets, with additional hardware support to be forthcoming.

Now that my efforts on FreeBSD/Broadcom Wi-Fi support have progressed far enough to be generally useful, I wanted to take some time to provide a brief overview of Broadcom's Wi-Fi hardware, and explain how my work provides a foundation for further FreeBSD Broadcom Wi-Fi/SoC improvements.

Unchaining Taskgated

14 Mar 2015, 13:18 PDT

On Mac OS X, application signing entitlements enforce client-side constraints on non-AppStore (or non-Apple-distributed) applications. This is used, for instance, to prevent a non-AppStore application from using the MapKit APIs.

Recently, I wanted to backport Xcode 6.3 from Yosemite, getting it running on Mavericks. Unfortunately, simply stripping signatures wasn't an option -- Xcode itself transitively depends on code signing via its use of XPC.

It's also not possible to simply re-sign a modified Xcode binary with a local ad hoc certificate (or a standard paid Mac Developer certificate); Xcode relies on Apple-privileged entitlements -- including the MapKit entitlement -- that aren't available without a trusted entitlement-granting provisioning profile.

These AppStore-only functionality constraints are enforced by the /usr/libexec/taskgated daemon; to work around it, I implemented task-unchain -- a small binary patch that may be applied to taskgated, disabling all checks for restricted entitlements.

XSmallTest Test DSL

06 Jun 2014, 08:46 PDT

I just published beta sources for XSmallTest (XSM), a minimal single-header implementation of a test DSL that generates test cases compatible with Xcode's XCTest:

```objc
#import "XSmallTest.h"

xsm_given("an integer value") {
    int v;

    xsm_when("the value is 42") {
        v = 42;

        xsm_then("the value is the answer to the life, the universe, and everything") {
            XCTAssertEqual(42, v);
        }
    }
}
```

I'll be gradually migrating some of my projects over; you can find the source code here.

No Exceptions: Checked Exceptions for Scala

13 Mar 2014, 06:27 PDT

I decided to sit down and solve a problem that has troubled me since I first started working with Scala almost 6 years ago: Checked exceptions, and specifically, the lack thereof.

The result is NX ("No Exceptions"), a Scala compiler plugin (compatible with 2.10 and 2.11) that provides support for Java-style checked exceptions:

```
test.scala:3: error: Unhandled exception type java.net.UnknownHostException; must be caught or declared
to be thrown. Consider the use of monadic error handling, such as scala.util.Either.
InetAddress.getByName("www.example.org")
^
one error found
```

There are some very strong opinions about checked exceptions; personally, I do not want to see exceptions, checked or otherwise, become a standard Scala idiom. The double meaning of the project name is intentional; in my book, the only thing worse than checked exceptions is unchecked exceptions, and I'd rather have no exceptions:

- Failure conditions are as much part of a function's type as its successful return value. There should be no exceptions to compiler type checking of something so fundamental.

- Exceptions are a terrible way to express error conditions. I always prefer monadic approaches such as Option and Either. There should be no exceptions used in non-Java code (and possibly even there).

That said, checked exception support has already found a number of bugs in our own code where we interface with Java APIs. The impetus to finally tackle this issue was hitting a trivially machine-detectable bug in the Scala standard library, one that the Java compiler would have flagged immediately.

If Scala is going to support exceptions at all, I believe that compiler-checked exceptions are a net gain over unchecked exceptions. You don't have to take my word for it, though; the lack of compiler-checked exceptions in Scala provides a unique opportunity to see what sort of bugs are discovered once automated validation is enabled -- especially when coupled with existing exception-checked Java code.

If you want to give NX a try, full installation and usage instructions are available via the NX home page.

PLPatchMaster: Swizzling in Style

17 Feb 2014, 14:56 PST

Introduction

Last year, I wrote a swizzling API for my own use in patching Xcode; I wanted an easy way to define new method patches, and the API wound up being pretty nice:

```objc
[UIWindow pl_patchInstanceSelector: @selector(sendEvent:) withReplacementBlock: ^(PLPatchIMP *patch, UIEvent *event) {
    NSObject *obj = PLPatchGetSelf(patch);

    // Ignore 'remote control' events
    if (event.type == UIEventTypeRemoteControl)
        return;

    // Forward everything else
    return PLPatchIMPFoward(patch, void (*)(id, SEL, UIEvent *), event);
}];
```

In light of Mattt Thompson's post on Method Swizzling today, I figured I'd dust it off, port it to armv7/armv7s/arm64, and share it publicly.

PLPatchMaster uses the same block trampoline allocator I originally wrote for PLBlockIMP, along with a set of custom trampolines.

It's similar to imp_implementationWithBlock() in implementation and efficiency; rather than simply re-ordering 'self' and '_cmd', we pass in enough state to allow forwarding the message to the original receiver's implementation.

Use it at your own risk; swizzling in production software is rarely, if ever, a particularly good idea.

Advanced Use

The library can also be used to register patches to be applied to classes that have not yet been loaded:

```objc
[[PLPatchMaster master] patchFutureClassWithName: @"ExFATCameraDeviceManager" withReplacementBlock: ^(PLPatchIMP *patch, id *arg) {
    /* Forward the message (and its argument) to the next IMP */
    PLPatchIMPFoward(patch, void (*)(id, SEL, id *), arg);

    /* Log the event */
    NSLog(@"FAT camera device ejected");
}];
```

PLPatchMaster registers a listener for dyld image events, and will automatically swizzle the target class when its Mach-O image is loaded.

Source Code

The source code is available via the official repository, or via the GitHub mirror.

Tales From The Crash Mines

06 Feb 2014, 07:26 PST

Over at Mike Ash's blog, he's posted my first issue of "Tales From The Crash Mines". I'll be writing this as an intermittent series on the interesting bugs we've tracked down on iOS and Mac OS X, interpreting crash reports, and software failure analysis and crash reporting in general.

The first issue explores a pretty interesting and educational bug, with a focus on the methodologies we use to derive more data from a fairly tricky crash report, and in the process, reconstruct exactly how an application failed.

Check it out! If you prefer PDFs (or dead tree reading), I've also posted a nicely formatted PDF generated from the original LaTeX sources.

Fix iOS Frameworks (or GTFO?)

13 Jan 2014, 07:48 PST

In response to my post on iOS Static Libraries, a number of people have asked for a radar they can easily submit to follow up with Apple. In the spirit of Fix Radar or GTFO, here's a template you can use to submit a bug asking Apple to fix the broken state of library distribution on iOS.

- Go to bugreport.apple.com, sign in and create a new issue

- Set the title to 'iOS Static Libraries Are Really Bad (duplicate of rdar://15800975)'

- Set the Product to "Developer Tools", Classification to "Feature (New)" and Is It Reproducible to "Not Applicable"

- Copy the template below and paste it in the relevant sections, then click 'Save'

Summary:
The lack of support for frameworks/dylibs on iOS has become the status quo, and has been and continues to be enormously limiting and costly to the iOS development ecosystem, as described here: http://landonf.bikemonkey.org/code/ios/Radar_15800975_iOS_Frameworks.20140112.html and in rdar://15800975.

Nearly 7 years after the introduction of iOS, it is well past time for Apple to prioritize closing the feature gap between iOS and Mac toolchains. A real framework solution plays a central role in how we as third-party developers can share and deliver common code.

Steps to Reproduce:
Ship or consume 3rd-party libraries on iOS.

Expected Results:
We can leverage the long-standing functionality of dylibs and frameworks as exists on Mac OS X.

Actual Results:
- Anyone distributing libraries has had to adopt hackish workarounds to facilitate their use by other developers.
- Anyone shipping resources to be bundled with their library has had to adopt similar work-arounds.
- Reproducibility and debugging information is lost, and common debug info cannot be shared or managed by the library provider.
- The limitations of Xcode and the need for multi-platform building for both iOS+Simulator (and often Mac OS X) force developers to deploy technically incorrect complex solutions, such as lipo'ing together device and simulator binaries.
- Standard static libraries do not support dylib linker features that are hugely useful when shipping and consuming libraries, such as two-level namespacing, LC_LOAD_DYLIB, etc.

Version:
Xcode 5.0.3 5A3005

Notes:

iOS Static Libraries Are, Like, Really Bad, And Stuff (Radar 15800975)

12 Jan 2014, 12:33 PST

Introduction

When I first documented static frameworks as a partial workaround for the lack of shared libraries and frameworks on iOS, it was 2008.

Nearly six years later, we still don't have a solution on par with Mac OS X frameworks for distributing libraries, and in my experience, this has introduced unnecessary cost and complexity across the entire ecosystem of iOS development. I decided to sit down and write up my concerns as a bug report (rdar://15800975), and realized that I'd nearly written an essay (and was overflowing the 3000-character limit of Apple's broken Radar web UI), so I may as well turn it into an actual blog post.

The lack of a clean library distribution format has had a significant, but not always obvious, effect on the iOS development community and norms. I can't help but wonder whether the responsible parties at Apple -- where internal developers aren't subject to the majority of constraints we are -- realize just how much the lack of a clean library distribution mechanism has impacted not just how we share libraries with each other, but also how we write them.

It's been nearly 7 years since the introduction of iPhoneOS. iOS needs real frameworks, and moreover, iOS needs multiple-platform frameworks, with support for bundling Simulator, Device, and Mac binaries -- along with their resources, headers, and related content -- into a single atomic distribution bundle that application developers can drag and drop into their projects.

The Problems with Static Libraries

From the perspective of someone that has spent nearly 13 years on Mac OS X and iOS (and various UNIXs and Mac OS before that), there are a litany of obvious costs and inefficiencies caused entirely by the lack of support for proper library distribution on iOS.

The limitations stand out in stark relief when compared to Mac OS X's existing framework support, or even other language environments such as Ruby, Python, Java, or Haskell, all of which when compared to the iOS toolchain, provide more consistent, comprehensive mechanisms for building, distributing, and declaring dependencies on common libraries.

Targeting Simulator and Device

When targeting iOS, anyone distributing binary static libraries has had to adopt complicated workarounds to facilitate both adoption and usage by developers. If you look at all the common static libraries for iOS -- PLCrashReporter included -- they've been manually lipo'd from iOS/ARM and Simulator/x86 binaries to create a single static library to simplify linking. Xcode doesn't support this, requiring complex use of multiple targets (often duplicated for each platform), custom build scripts, and more complex development processes that increase the cognitive load for any other developers that might want to build the project.

On top of this, such binaries are technically invalid; Mach-O Universal binaries only encode architecture, not platform, and were there ever to be an ARM-based Mac, or an x86-based iOS device, these libraries would fail to link, as they conflate architecture (arm/x86) with platform (ios/simulator). Despite all that, we hack up our projects and ship these lipo'd binaries anyway, as the alternative is increasing the integration complexity for every single user of our library.

To make this work, library authors have employed a variety of complex work-arounds, such as using duplicated targets for both iOS and Simulator libraries to allow a single Xcode build to produce a lipo'd binary for both targets, driving xcodebuild via external shell scripts and stitching together the results, and employing a variety of 3rd-party "static framework" target templates that attempt to perform the above.

By comparison, Apple has the benefit of both being able to ship independent SDKs for each platform, and having support for finding and automatically using the appropriate SDK built into Xcode. As such, they're free to ship multiple binaries for each supported platform, and any user can simply pass a linker flag, or add the Apple-supplied libraries or frameworks to the appropriate build phase, and expect them to work.

Library Resources

One of the significant features of frameworks on Mac OS X is the ability to bundle resources. This doesn't just include images, nibs, and other visual data, but also bundled helper tools, XPCServices[1], and additional libraries/frameworks that their framework itself depends on.

On iOS, we can rule out XPC services and helper tools; we're not allowed to spawn subprocesses or bundled XPC services. That restriction arguably made more sense in the era of the 128MB first-generation iPhone than it does now, but it is a subject for another blog post.

However, that leaves the other resource types -- compiled xibs, images, audio files, textures, etc -- the distribution and use of which winds up being far more difficult than it needs to be. On Mac OS X, we can use great APIs like +[NSBundle bundleForClass:] to automatically find the bundle in which our framework class is defined, and use that bundle to load associated resources. Mac OS X users of our frameworks only have to drop our framework into their project, and all the resources will be available and in an easily found location.

On iOS, however, anyone shipping resources to be bundled with their library has had to adopt work-arounds. External resources are often provided as another, independent bundle that must be placed in their application bundle by end-users, increasing the number of steps required to integrate a simple library. Everyone has to write their own resource location code -- it's just a few lines of code to replace the functionality of +[NSBundle bundleForClass:] as an NSBundle category, but they're a few lines of code that shouldn't need to be written, and certainly not by every author of a library containing resources.

This increase in effort -- both on your users, and on you as an author, leads to questioning whether you really need to ship a resource with your library, even if it would be the best technical solution. It changes the value equation, and as such, library authors deem the effort too complex for a simple use case, and instead do more work to either avoid including the resource, programmatically generate it, or in extreme cases, figure out how to bundle the resource into the static library itself, eg, by inclusion as a byte array in a source file.

Meanwhile, when targeting Mac OS X, we've long since added the resource to the framework bundle and moved on.

Debugging Info and Consistency

One of the great features of dynamic libraries is consistency. Even if two different applications both ship a duplicate copy of the same version of a shared library, the actual library itself will be the same.

This brings with it a number of advantages when it comes to supporting a library in the wild: should an issue arise in the library, we as library authors can trust that we know exactly what tools were used to build it, and that we have the original debugging symbols available (ideally, we supplied them with the binary). This gives us a level of assurance that allows us to provide better support with less effort when and if things go wrong.

However, when using static libraries, that level of consistency and information sharing is lost. In years past, for example, I saw issues related to a specific linker bug that resulted in improper relocation of Mach-O symbols during final linking of the executable; the resulting crashes only occurred in the specific user's application, and could only be reproduced with a specific set of linker input.

If they attempted to reproduce the linker bug with an isolated test case, it would disappear, as the bug itself was dependent on the original linker input. The only way that I could provide assistance was if they sent me their project, including source code, so that I could debug the linker interactions locally. For obvious reasons, most developers can not send their company's source code to an external developer, and the issue generally disappeared forever if they changed the linker input -- eg, by adding or removing a file.

I was never able to get a reproduction case, and I was never able to reproduce the issue locally. For a few years, I'd receive sporadic bug reports about the linker issue appearing, and then disappearing, until finally some update to the Xcode toolchain seemed to have solved the issue, and -- through no change that I made -- the issue disappeared.

Consistency facilitates reliability.

However, there are advantages beyond reliability to having consistent versions of your framework used across all applications. One of the other advantages is transparency, and specifically, transparency when investigating failure.

When a static library is linked into a binary, all the symbols are relocated, linked, and new debugging information is generated -- assuming debugging information was available in the first place: the default 'release' target for static libraries strips out the DWARF data, and if you're shipping a commercial library, you may not want to expose the deep innards of your software by providing every user of your library with a full set of DWARF debugging info.

Given that, even if multiple applications use the same exact version of a library, each and every application build generates build-specific debug information, and in modern toolchains (eg, via LTO), may in fact generate code that substantively differs from the library as shipped. As a library author, you are entirely reliant on whatever debug information was preserved by the user, and in performing post-facto analysis of a crash, you cannot perform deep analysis of your library's machine code without also having access to the user's own application binary, along with the DWARF debugging information that contains not only the debug info for your library, but also that of the end-user's application.

That all assumes that you, as a library author, ship debugging symbols. If you're providing a commercial library for which debugging info must not be provided, then there is no reasonable way to perform post-facto debugging of your library after it has been statically linked into the final application.

By comparison, dynamic libraries and frameworks maintain consistency -- any DWARF dSYM that is preserved by the library author will apply equally to any integration of that version of the library. Commercial library vendors can provide debugging information as necessary post-crash, and as opposed to the symbol stripping that occurs as a link-time optimization when using static libraries, the public symbols of the dynamic library will be visible to the application developer, allowing them introspection into failures even in the case where no debugging info is supplied.

Missing Shared Library Features

Over the decades since shared libraries were first deployed, a variety of features were introduced that solved very real problems related to hiding implementation details, versioning, and otherwise presenting a clean interface to external consumers of the library.

Dependent Libraries

The most obvious example is linking of dependent libraries. When you add a framework to your project, that framework already has encoded the libraries it depends on; simply drop the framework in, and no further changes are required to the list of libraries your project itself links to.

With static libraries, however, it's the application developer's responsibility to add all required libraries in addition to the one they actually want to use. Some companies have gone so far as producing custom integration applications that walk users through the process of configuring their Xcode project just to provide that same level of ease-of-use that you'd get for free from frameworks. Other library implementors have switched to dlopen()'ing their dependencies just to avoid having to deal with user support around configuring linked libraries -- given a linker error showing only the undefined symbols, it's rarely obvious to an unfamiliar application developer what library they should link to fix it.

Even if users should know how to do this successfully, it remains a totally unnecessary burden to place on every single consumer of a library, and forces library authors to reconsider adding new system framework dependencies to their project -- even if it would be the best technical choice -- as it will break the build of every project that upgrades to a new version with that dependency, requiring additional configuration on behalf of the application author, and additional support from the library developer.

Two-level Namespacing

However useful automatic dependent library linking may be, there are much more significant (and much less easily worked-around) features not provided by static libraries -- such as two-level namespacing.

Two-level namespacing is a somewhat unique Apple platform feature -- instead of the linker recording just an external reference to a symbol name, it instead records both the library from which the symbol was referenced (first level of the namespace) AND the symbol name (second level of the namespace).

This is a huge win for compatibility and avoiding exposing internal implementation details that may break the application or other libraries. For example, if my framework internally depends on a linked copy of sqlite3 that includes custom build-time features (as PLDatabase actually does), and your application links against /usr/lib/libsqlite.dylib, there is no symbol conflict. Your application will resolve SQLite symbols in /usr/lib/libsqlite.dylib, and the library will resolve them in its local copy of libsqlite3.

If you're using static libraries, however, two-level namespacing can't work. Since the static library is included in the main executable, the static library and the main executable share the same references to the same external symbols.

Without two-level namespacing, internal library dependencies -- such as libc++/libstdc++ -- are exposed to the enclosing application, causing build breakage, incompatibility between multiple static libraries, incompatibilities with enclosing applications, and depending on the library in question, the introduction of subtle failures or bugs. This requires work-arounds on behalf of the library author -- in libraries such as PLCrashReporter, where a minimal amount of C++ is used internally, this has resulted in our careful avoidance of any features that would require linking the C++ stdlib. This is not an approach that would work for a project making use of more substantial C++ features, and the result is that library authors must either provide two versions of their library, one using libc++, one using libstdc++, or all clients of that library must switch C++ stdlibs in lockstep - even if they neither expose nor rely on C++ APIs externally.

Symbol Visibility

One of the features that is possible to achieve with static libraries is the management of symbol visibility. For example, PLCrashReporter ships with an embedded copy of the protobuf-c library. To avoid conflict with applications and libraries that also use protobuf-c, we rely on symbol visibility to hide the symbols entirely (though, if we had two-level namespaces, we could have avoided the problem in the first place).

To export only a limited set of symbols, we can use linker support for generating MH_OBJECT files from static libraries. This is called "single object pre-link" in Xcode, and uses ld(1)'s '-r' flag. Unfortunately, MH_OBJECT is not used by Xcode's static library templates by default, is seemingly rarely used inside of Apple, and has exhibited a wide variety of bugs. For example, a recent update to the Xcode toolchain introduced a bug in MH_OBJECT linking; when used in combination with -exported_symbols_list, the linker generates an invalid __LINKEDIT vmsize in the resulting Mach-O file (rdar://15042905 -- reported in September 2013).

This highlights a major issue with static libraries: Apple doesn't use them the way we do. Things that aren't used largely aren't tested, with a high tendency towards bitrot and regression; unusual linker flags are no exception.

Conclusion

Above, I've listed a litany of issues that I've seen with actually producing and maintaining static libraries for iOS, and their deficiencies compared to a solution that NeXT was shipping a form of nearly 30 years ago. My list of issues is hardly exhaustive -- I didn't even mention ld's stripping of Objective-C categories, -all_load, and the work-arounds people have employed.

However, all technical issues aside, what's worse are the effects that these technical constraints have had on the culture of code sharing on the platform. The headaches involved in shipping binary libraries have contributed to most people not trying. Instead, we've adopted hacks and workarounds that create both technical debt and greatly constrain the power of tools we can bring to bear on problems. Since static libraries are so painful, instead, people gravitate towards solutions that, while technically sub-optimal, are eminently pragmatic:

- Drop-in source files that you're expected to include in your project, or

- Xcode projects that one must include as a subproject, or

- Shipping xcconfig files that the users must manually include to set the right configuration for a binary and/or subproject library

These workarounds have introduced a number of problems for the development community; the majority of my support requests have nothing to do with actually *using* my libraries. Rather, users get stuck trying to integrate them at all -- as a subproject, or trying to embed the source files, or all of the above.

Developers are regularly frustrated by projects that can't easily be integrated via source code drops, or have complicated sets of targets that are required when attempting to embed a subproject -- but if it were not for the limitations of iOS library distribution, the internals of our library build systems need not be exposed at all to developers.

For library authors, all of these integration hacks -- embedding source code, reproducing project configuration, hacking projects into use -- result in unique builds of the library that don't correspond to any specific known release, making support and maintenance all the more difficult.

In response to these issues, a variety of cultural shifts have occurred. Tools like CocoaPods automate the extraction of source code from a library project, generate custom Xcode workspaces and project files, and reproduce the build configuration from the library project in the resulting workspace. Through this complex and often fragile mechanism, users can integrate library code in a way that begins to approach the simple atomic integration of a framework, but at the cost of ideally unnecessary user-facing additions to their project's build environment, significant fragility around the process, and not insignificant overhead for library authors themselves.

Outside of CocoaPods, the unnecessary complexity of distribution and packaging libraries for iOS has in no small part resulted in a decrease in the availability of easily integrated and well-designed frameworks. This is no surprise, as a significant, discouraging amount of effort is required to produce something on par with what was easily done with frameworks, and in doing so, one often has to introduce duplicated targets, complex scripts, and other work-arounds that are both time consuming to implement and maintain, and make the project inhospitable to potential contributors.

Apple’s lack of priority on solving the problem of 3rd-party iOS library distribution has taken a real toll on the community’s ability to produce documented, easily integrated, consistently versioned libraries with reasonable effort; most people seemingly don't even try outside of CocoaPods, whereas this was the norm on Mac OS X.

For those of us outside of Apple, limited to only what is permitted by Apple for 3rd-party developers, I believe that this stance has been damaging to the culture of software craftsmanship by introducing significant discouraging costs and inefficiencies when trying to produce consistent, transparent, easily integrated high-quality libraries.

Nearly 7 years after the introduction of iOS, it is well past time for Apple to prioritize closing the gap between iOS and Mac toolchains. A real framework solution is not the only improvement we need to see to the development environment, and certainly not the most important one, but it plays a central role in how we as third-party developers can share and deliver common code.

[1] Technically, only system frameworks can currently embed XPCServices on Mac OS X. Mentioned in rdar://15800873.

Update: On request (and in the spirit of Fix Radar or GTFO), I've posted a template you can use to submit a Radar to Apple about this issue. Please consider gritting your teeth and using Radar Web to submit a bug.

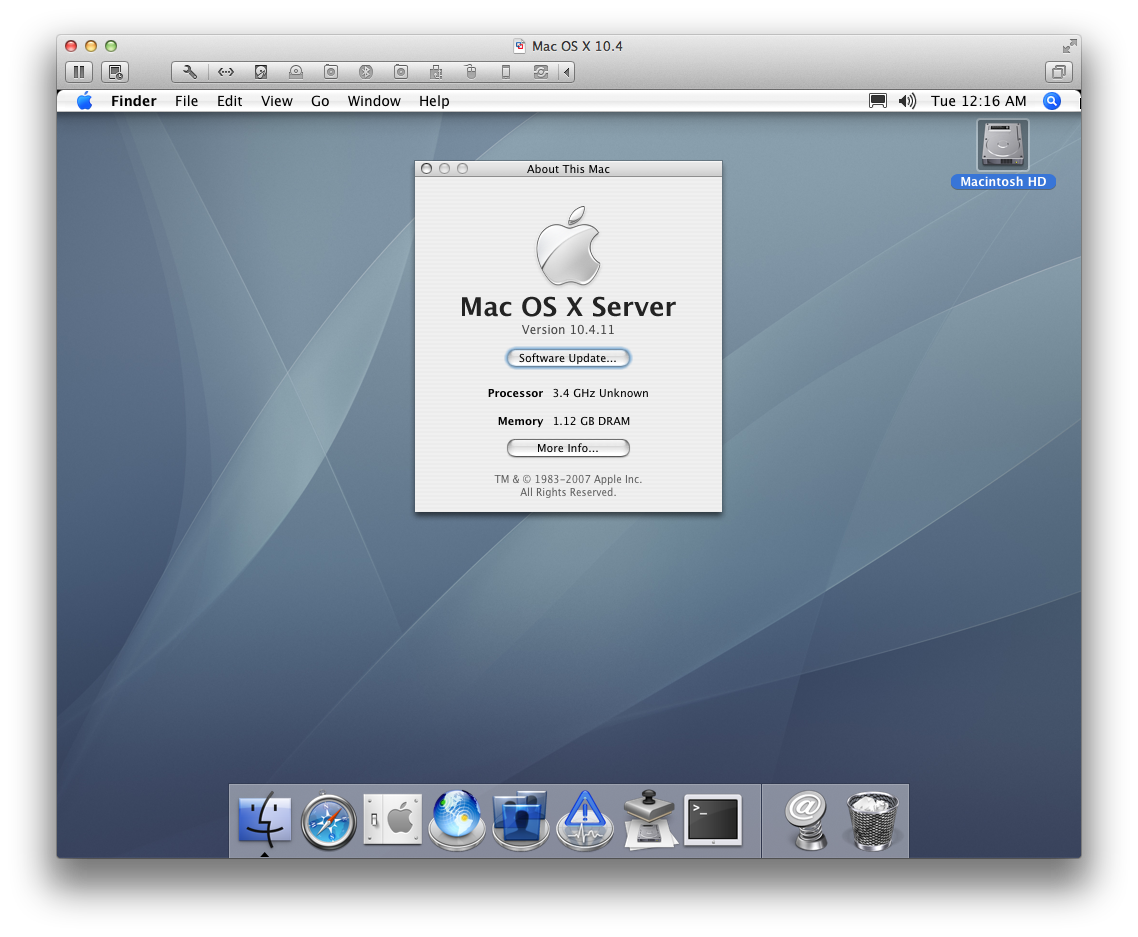

Mac OS X 10.4 under VMware Fusion on Modern CPUs

17 Dec 2013, 09:38 PST

Running 10.4.11 under VMware Fusion 6

Introduction

I recently agreed to port a small piece of code to Tiger (10.4), assuming that it would be relatively easy to bring Tiger up in VMware Fusion on my Mavericks desktop; I already keep VMs for all of the other Mac OS X releases starting from 10.6.

As it turns out, it wasn't so easy; after downloading a copy of 10.4 Server from the Apple Developer site, I found that the installer DVD would panic immediately on boot. Apparently Tiger uses the older CPUID[2] mechanism for fetching processor cache and TLB information (see xnu's set_intel_cache_info() and page 3-171 of the Intel® 64 and IA-32 Architectures Software Developer’s Manual, Volume 2).

Virtualized systems query the host's real CPUID data to acquire basic information on supported CPU features; newer processors -- mine included -- do not include cache descriptor information in CPUID[2]. Without those descriptors, the Tiger kernel left the cache line size initialized to 0 in set_intel_cache_info(), and then panicked immediately thereafter when initializing the commpage and checking for (and not finding) a required 64 byte cache line size.

In theory, VMware Fusion has support for masking CPUID values for compatibility with the guest OS by editing the .vmx configuration by hand, but I wasn't able to get any cpuid mask values to actually apply, so I eventually settled for binary patching the Tiger kernel to force proper initialization of the cache_linesize value.

If you find yourself needing to run Tiger for whatever reason, I've packaged up my patch as a small command line tool. It successfully applies against the 10.4.7 and 10.4.11 kernels that I tried. While you should run it against /mach_kernel after mounting the Tiger file system under your host OS, I found that the tool worked just fine when run directly against my raw VMware disk image file, as well as against the Mac OS X Server 10.4.7 installation DMG.

Using the tool, I was able to install 10.4.7, upgrade to 10.4.11, and then patch the 10.4.11 VMDK to allow booting into the upgraded system.

Source Code

You can download the source here. Obviously, this is totally unsupported and could destroy all life as we know it, or just your Tiger VM; use at your own risk.

Keychain, CAs, and OpenSSL

14 May 2013, 09:39 PDT

It took me almost 13 years, but I finally sat down and solved a problem that has annoyed me since Mac OS X 10 Public Beta: synchronizing OpenSSL's trusted certificate authorities with Mac OS X's trusted certificate authorities.

The issue is simple enough; certificate authorities are used to verify the signatures on x509 certificates presented by servers when you connect over SSL/TLS. Mac OS X's SSL APIs have one set of certificates, OpenSSL has another set, and OpenSSL doesn't ship any by default.

The result of this certificate bifurcation:

- Any app using SSL (curl, svn, git) will fail to validate remote certificates unless you manually add all of the CA certificates to OpenSSL's certificate store.

- If you use a custom CA (eg, to sign certificates for your internal services), you'll need to add the CA certificate to OpenSSL's certificate store.

Given that computers can automate the mundane, I finally put aside some time to write certsync (permanent viewvc).

It's a simple, single-source-file utility that reads all trusted CAs via Mac OS X's keychain API, and then spits them out as an OpenSSL PEM file. If you pass it the -s flag, it will continue to run after the first export, automatically regenerating the output file when the system trust settings or certificate authorities are modified.

I wrote the utility for use in MacPorts; it's currently available as an experimental port. If you'd like to test it out, it can be installed via:

```sh
port -f deactivate curl-ca-bundle
port install certsync
port load certsync # If you want the CA certificates to be updated automatically
```

Note that forcibly deactivating ports is not generally a good idea, but you can easily restore your installation back to the expected configuration via:

```sh
port uninstall certsync
port activate curl-ca-bundle
```

Or, you can build and run it manually:

```sh
clang -mmacosx-version-min=10.6 certsync.m -o certsync -framework Foundation -framework Security -framework CoreServices
./certsync -s -o /System/Library/OpenSSL/cert.pem
```

I also plan to investigate support for Java JKS truststore export (preferably before 2026).